For the last few years I’ve been working on mapping out social relationships in cities with my project peoplemaps.org. I had the chance to speak about this work recently at the TED Global conference, and the talk was featured this week on the ted.com website. This has led to many, many inquiries about the project, how it works, and the limits of its applicability.

So I wanted to take an opportunity to explain in high-level terms how I’m doing this work, what it tells us, and what it does not. I’ll attempt here to cover the approach in enough detail so others can reproduce it, without going into deep implementation details which are frankly not important to understanding the concepts. However, I’m happy to collaborate with anyone who would like to discuss implementations.

Theoretical Foundation

In the real world, each of us has personal relationships: our family, friends, co-workers, people we interact with in social settings, children and their parents and friends, and the like. The conventional wisdom is that we can maintain roughly 150 real-world relationships; this is called the “Dunbar Number,” after Robin Dunbar, the anthropologist who identified the phenomenon. Some people may have more, some fewer, but 150 is probably average.

In an online setting, people may have many more relationships — perhaps a few thousand that they interact with in some meaningful way. However, the offline, real-world relationships that people have will likely be an overlapping subset of the relationships they have in an online setting. Online relationships are often more flexible (they can be global and operate at all times of day), though not always as meaningful. Still, if you are able to look at people’s online relationships in a specific geography, you have at least a proxy for understanding their offline, real-world relationships as well.

The ability to infer things about both offline and online interactions is derived from the principle of homophily, which sociologists identified as the powerful tendency for people to cluster into groups of people who are similar to themselves. Colloquially we know this through the saying, “birds of a feather flock together,” and it is powerfully demonstrated in network data.

So if we accept the notion that people do, in fact, have relationships that both shape and are shaped by their interactions, then it follows that there may be some ways to measure these relationships with at least some level of fidelity. Social network data appears to offer at least a window into these real-world relationships, though each dataset has biases which are not yet well understood. However, by comparing results from different networks, we can start to get a sense for what those biases might be.

This is where the state of the art for this research is right now: trying to understand how these different data sets are biased, the nature of those biases, and whether the biases are material in terms of distorting the “real world” network we are trying to understand. However, this lack of perfect understanding isn’t preventing people from using this data to do all kinds of things: from recommending movies to helping you find “people you may know” to identifying terror cells using cell-phone “metadata.” All of these activities use essentially the same approach.

These Maps Are NOT Geographic

A quick caveat: while we typically think of city maps as geographic, these maps are explicitly NOT geographic in nature. Rather, they show communities and their relationships to each other. The position of communities relative to the page is always arbitrary; their position relative to each other is determined by the presence or absence of relationships between them. I bring this up now to dissuade any notion you might have that these maps are geographic, despite whatever resemblance (real or imagined) they might have to geography. That said, there are ways to tie these maps back to geography and use it as an additional investigative tool, but you should assume that all discussion of maps here is non-geographic unless otherwise noted.

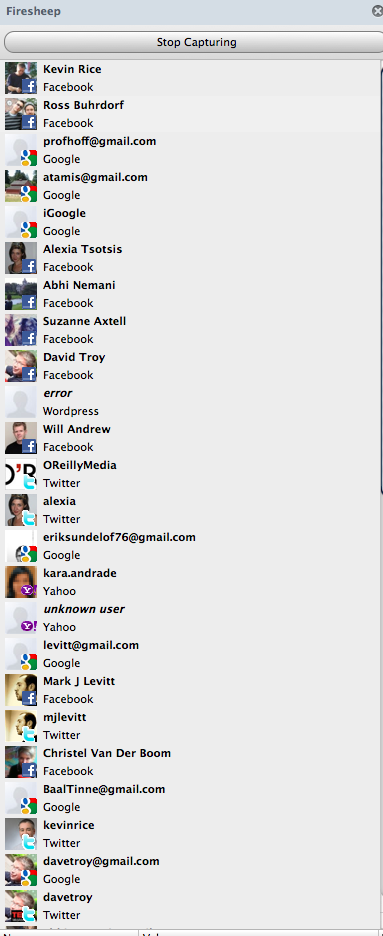

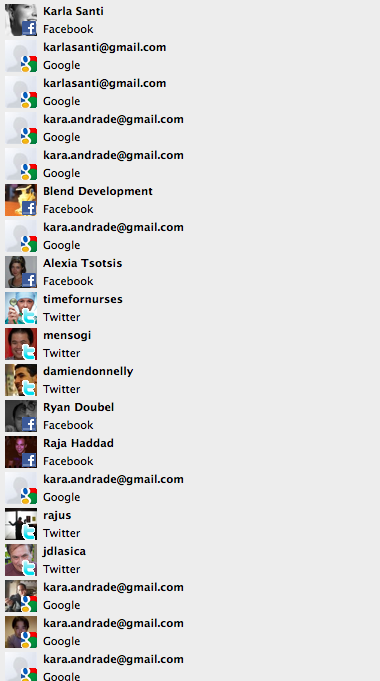

Gathering the Data — and Avoiding Bieber Holes

Depending on the dataset you are looking to explore, the exact details of how you gather data will vary somewhat; I have used data from Twitter, Facebook, LinkedIn, AngelList, email, and other sources. In all cases, you will want to gather a set of “nodes” (people, users, or companies, depending on the data source) and “edges” (relationships between them, typically “friend” or “follow” relationships).

For the case of the city network maps, this is the approach I have used with Twitter data:

1. Define a target geography using a geographic bounding box.

2. Determine a set of keywords and location names that users inside the target geography may use to identify their location/geography.

3. Identify a set of “seed” users within the bounding box, or which otherwise appear to verifiably be within the target geography.

4. Determine which user identifiers are followed by a given user, and record that in a list that maintains the number of followers seen so far for each user identifier.

5. When a given user identifier is followed by a number of people exceeding a threshold, request the full user information for that user to see if it appears to be within the target geography.

6. If that user is within the target geography, then feed it back into step 4, requesting the user identifiers followed by that user.

7. Repeat steps 4-6 until there are few new additions to the dataset.
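The crawl described above can be sketched in a few lines. This is only an illustration under stated assumptions, not my production code: `get_following`, `get_profile`, and `in_target` are hypothetical stand-ins for whatever API calls and location test you use, and the toy dictionaries replace real network data.

```python
from collections import Counter, deque

def crawl(seeds, get_following, get_profile, in_target, threshold=1):
    """Snowball crawl from seed users.

    A candidate is admitted only once more than `threshold` accepted users
    follow it AND its profile passes the location test.
    All three callables are hypothetical placeholders for real API calls.
    """
    accepted = set(seeds)
    follow_counts = Counter()   # candidate id -> accepted users following it
    checked = set()             # candidates whose profile was already requested
    edges = set()               # (follower, followed) pairs seen so far
    queue = deque(seeds)
    while queue:
        user = queue.popleft()
        for followed in get_following(user):
            edges.add((user, followed))
            if followed in accepted:
                continue
            follow_counts[followed] += 1
            if follow_counts[followed] > threshold and followed not in checked:
                checked.add(followed)
                if in_target(get_profile(followed)):
                    accepted.add(followed)
                    queue.append(followed)
    # Keep only edges whose endpoints both ended up in the dataset.
    edges = {(a, b) for a, b in edges if a in accepted and b in accepted}
    return accepted, edges

# Toy data standing in for a real social network.
FOLLOWING = {
    "ann": ["bob", "cat", "zed"],
    "bob": ["ann", "cat", "zed"],
    "cat": ["dan"],
    "dan": [],
    "zed": ["ann"],
}
PROFILES = {"ann": "Baltimore", "bob": "Baltimore", "cat": "Baltimore, MD",
            "dan": "Baltimore", "zed": "Paris"}

nodes, edges = crawl(
    seeds=["ann", "bob"],
    get_following=lambda u: FOLLOWING.get(u, []),
    get_profile=lambda u: PROFILES.get(u, ""),
    in_target=lambda location: "baltimore" in location.lower(),
)
print(sorted(nodes))
```

In this toy run, "zed" is followed by enough people but fails the location test, while "dan" passes the location test but never collects enough followers, so neither is admitted. The threshold is what keeps the crawl from requesting a profile for every account anyone follows.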

Doing this process once produces a “first draft” data set, which can then be visualized for inspection — to look for improper inclusions, obvious exclusions, and any particular data artifacts or pathologies.

At this stage, various problems may appear. As an example, if you are trying to visualize Birmingham UK, you will likely end up with some data for Birmingham, Alabama, due to legitimate confusion which may exist between the two communities. At this point, you can modify the test used to determine whether something is inside the target or not, and regenerate the dataset, as well as perform additional data gathering iterations to get more of the “right” data. This process typically takes a few iterations to really drill down into the data you’re looking for.
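As a rough illustration of what such a test might look like, here is a sketch that combines a bounding box with include and exclude keywords. The profile fields, the keyword lists, and the box coordinates are all assumptions chosen for the Birmingham example; the box is approximate and not a surveyed boundary.

```python
def in_target(profile, bbox, include, exclude):
    """Return True if a profile plausibly belongs to the target geography.

    profile: dict with an optional "coords" (lat, lon) pair and an optional
             free-text "location" string (hypothetical field names).
    bbox:    (min_lat, min_lon, max_lat, max_lon).
    """
    coords = profile.get("coords")
    if coords is not None:
        # Coordinates, when present, are trusted over free text.
        lat, lon = coords
        min_lat, min_lon, max_lat, max_lon = bbox
        return min_lat <= lat <= max_lat and min_lon <= lon <= max_lon
    text = profile.get("location", "").lower()
    if any(term in text for term in exclude):
        return False
    return any(term in text for term in include)

# Approximate, illustrative box around Birmingham, UK.
BIRMINGHAM_UK = (52.35, -2.05, 52.62, -1.70)
INCLUDE = ["birmingham", "brum"]
EXCLUDE = ["alabama", "birmingham, al", "usa"]

for profile in [
    {"location": "Birmingham, UK"},
    {"location": "Birmingham, AL"},
    {"coords": (52.48, -1.90)},
    {"coords": (33.52, -86.80), "location": "Birmingham"},
]:
    print(profile, in_target(profile, BIRMINGHAM_UK, INCLUDE, EXCLUDE))
```

Each iteration of the refinement process amounts to adjusting the include and exclude lists (or the box) and regenerating the dataset.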

One persistent problem I’ve come across is a phenomenon I call Bieber Holes, which are essentially regions of the network occupied by Justin Bieber fans. They are so virulent, and their networks so dense, that only aggressive exclusion filters can prevent the algorithm from diving down into these holes and unearthing millions of Beliebers, only a fraction of whom may pass location tests. Anyway, I’ve developed good techniques to avoid Bieber holes (and similar phenomena), but it’s a reminder that when working with data from the public with algorithmic approaches, editorial discretion is required.

Laying out the Network Graph

There are dozens of algorithms for laying out network graph data, each optimized to illustrate different properties of the graph. Since I’m primarily interested in homophily and clustering, I’m looking for layouts that express communities of relationships. A good way to do that is to use a force-directed graph layout algorithm; with this approach, relationships act like springs (expressing Hooke’s law), and each user or node repels nearby nodes (expressing Coulomb’s law). By iteratively drawing the graph based on these forces, the graph will eventually reach a steady state which exhibits the following properties:

- People with many relationships between them will be arranged into tight clusters.

- People with the fewest relationships between them will appear at opposite edges of the graph.

- People who have many relationships at both ends of the graph will appear in the middle.

- Clusters with few or no relationships between them will appear very far apart on the graph.
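To make the spring-and-repulsion idea concrete, here is a deliberately naive sketch of a force-directed layout. It is not the algorithm of any particular tool; the function name, the constants, and the displacement cap are my own illustrative choices, and the all-pairs repulsion loop would be far too slow for a real city-sized graph.

```python
import math
import random
from itertools import combinations

def force_layout(nodes, edges, iterations=500, rest_length=1.0,
                 spring_k=1.0, repulse_k=1.0, step=0.05, max_move=0.1, seed=0):
    """Naive force-directed layout: Hooke springs on edges, Coulomb-style
    repulsion between every pair of nodes. Returns {node: (x, y)}."""
    nodes = list(nodes)
    rng = random.Random(seed)
    pos = {n: [rng.random(), rng.random()] for n in nodes}
    for _ in range(iterations):
        force = {n: [0.0, 0.0] for n in nodes}
        # Repulsion: every pair pushes apart with magnitude repulse_k / d^2.
        for a, b in combinations(nodes, 2):
            dx = pos[a][0] - pos[b][0]
            dy = pos[a][1] - pos[b][1]
            dist = max(math.hypot(dx, dy), 1e-6)
            f = repulse_k / dist ** 2
            force[a][0] += f * dx / dist
            force[a][1] += f * dy / dist
            force[b][0] -= f * dx / dist
            force[b][1] -= f * dy / dist
        # Springs: each edge pulls (or pushes) toward its rest length.
        for a, b in edges:
            dx = pos[b][0] - pos[a][0]
            dy = pos[b][1] - pos[a][1]
            dist = max(math.hypot(dx, dy), 1e-6)
            f = spring_k * (dist - rest_length)
            force[a][0] += f * dx / dist
            force[a][1] += f * dy / dist
            force[b][0] -= f * dx / dist
            force[b][1] -= f * dy / dist
        # Move each node a small, capped distance along its net force.
        for n in nodes:
            mx, my = step * force[n][0], step * force[n][1]
            size = math.hypot(mx, my)
            if size > max_move:
                mx, my = mx * max_move / size, my * max_move / size
            pos[n][0] += mx
            pos[n][1] += my
    return {n: (p[0], p[1]) for n, p in pos.items()}

# Two fully connected groups of five with no relationships between them.
group_a = [f"a{i}" for i in range(5)]
group_b = [f"b{i}" for i in range(5)]
edges = list(combinations(group_a, 2)) + list(combinations(group_b, 2))
layout = force_layout(group_a + group_b, edges)
for name, (x, y) in sorted(layout.items()):
    print(f"{name}: ({x:.2f}, {y:.2f})")
```

Within each group, springs and repulsion balance each other; between the groups there are no springs at all, so only repulsion acts. Real layout tools typically approximate the repulsion step (for example with Barnes-Hut style grouping) so that it scales beyond a few thousand nodes.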

You can think of this in very simple terms. If you have a room full of 10 people, there can be a total of 45 relationships between them (n * (n-1)/2, since someone is not friends with themselves). If every person is friends with every other person, this network will appear as a perfect and symmetric “ball” under the rules of a force directed graph layout.

Likewise, let’s suppose that same room of 10 people was grouped into two groups of 5 people, and that those two groups hated each other and refused to speak, but each member of each group knows every other member of their own group. You would see two perfect “ball” layouts (each with 10 relationships expressed), but with no connection between them.

When we visualize data from a city in this way, we are essentially measuring the separateness of communities — whether we see several of these separate groups or whether we see one unified community.

Note that in the example with 10 people in one group, we have a total of 45 relationships, while in the example with two groups of 5, there are only 20 (2 x 10). Scaling that up to a city of 500,000 people, if the city were fully meshed, there would be 124,999,750,000 total relationships, while if that city were segregated into two groups of 250,000, there could only be 31,249,875,000 relationships in each group, or 62,499,750,000 total relationships across both groups, which is a little less than half of the number of relationships possible if the two groups merged.
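The arithmetic above is easy to check. A small sketch (the function name is just for illustration):

```python
def max_relationships(n):
    """Number of possible pairwise relationships among n people: n(n-1)/2."""
    return n * (n - 1) // 2

one_room = max_relationships(10)            # 45
two_rooms = 2 * max_relationships(5)        # 20
one_city = max_relationships(500_000)       # 124,999,750,000
split_city = 2 * max_relationships(250_000) # 62,499,750,000

print(one_room, two_rooms)
print(f"{one_city:,} vs {split_city:,}")
print(f"split city keeps {split_city / one_city:.4%} of possible relationships")
```

Because the count grows with the square of the group size, splitting a population in two removes roughly half of the possible relationships, no matter how large the city is.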

Detecting Communities and Adding Color

Within the network, we can use algorithms to detect distinct communities. Communities are defined by the number of shared relationships within a given subgroup. We use an algorithm called the Louvain community detection algorithm, which iteratively determines communities of interest within the larger network, and assigns a community membership to each user accordingly.
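Louvain works by greedily improving a score called modularity, which rewards partitions whose communities contain more internal relationships than chance would predict. A full Louvain implementation is too long to show here, but the score itself is short. The sketch below computes it for an undirected, unweighted edge list; treating follow relationships as undirected is a simplifying assumption, and the function and variable names are mine.

```python
from collections import defaultdict
from itertools import combinations

def modularity(edges, community_of):
    """Modularity of a partition of an undirected, unweighted graph.

    edges:        list of (a, b) pairs, each relationship listed once.
    community_of: dict mapping every node to a community label.
    """
    m = len(edges)
    internal = defaultdict(int)    # edges with both ends in the community
    degree_sum = defaultdict(int)  # total degree of the community's nodes
    for a, b in edges:
        ca, cb = community_of[a], community_of[b]
        degree_sum[ca] += 1
        degree_sum[cb] += 1
        if ca == cb:
            internal[ca] += 1
    return sum(internal[c] / m - (degree_sum[c] / (2 * m)) ** 2
               for c in degree_sum)

# Two tight groups of five joined by a single bridging relationship.
group_a = [f"a{i}" for i in range(5)]
group_b = [f"b{i}" for i in range(5)]
edges = (list(combinations(group_a, 2)) + list(combinations(group_b, 2))
         + [("a0", "b0")])

split = {n: "A" for n in group_a}
split.update({n: "B" for n in group_b})
merged = {n: "all" for n in group_a + group_b}

q_split = modularity(edges, split)
q_merged = modularity(edges, merged)
print(f"two communities: {q_split:.3f}, one community: {q_merged:.3f}")
```

The two-community partition scores well above the single-community one, which is the signal a modularity-based method follows when it decides that these ten people form two communities rather than one.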

We can then assign each of these assigned communities a color. For my work so far, I have assigned these colors arbitrarily, with the only goal of visually differentiating one community from another. This helps to generate an aesthetically pleasing visual representation.

While communities often correlate to clustering exhibited by the layout algorithm, for nodes that are not clearly members of only one cluster, color can be used to indicate their primary community affiliation. This is helpful in the visualization because it generates blended color fields that can give a sense of the boundary between two communities.

For example, a group primarily concerned with politics (blue) and a group primarily concerned with music (yellow) may have a mix of both blue and yellow nodes in the space between those distinct communities, and you can get a sense that those people are very interested in both communities, and of what their primary affiliation might be.

Assigning Node Size with In-Degree

In a graph of Twitter users, it can be helpful to indicate how many people are following a given user within our specific graph (note that this is calculated for our subgraph, not taken from a user’s “global” follower count as displayed by Twitter).

We can do that by making the “dot” associated with each user bigger or smaller based on the number of followers. This has no real effect on the shape of the network, but it can be helpful in determining what kinds of users are where, and how people have organized themselves into communities of interest.
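A minimal sketch of that calculation follows. The square-root scaling and the size constants are my own assumptions (they keep a dot's area roughly proportional to its follower count), not a rule of the method.

```python
import math
from collections import Counter

def node_sizes(nodes, follow_edges, min_size=2.0, scale=3.0):
    """Dot size per node from in-degree counted inside the subgraph only.

    follow_edges: iterable of (follower, followed) pairs; any pair with an
                  endpoint outside `nodes` is ignored.
    """
    nodes = set(nodes)
    in_degree = Counter(followed for follower, followed in follow_edges
                        if follower in nodes and followed in nodes)
    return {n: min_size + scale * math.sqrt(in_degree[n]) for n in nodes}

nodes = {"ann", "bob", "cat"}
follow_edges = [("ann", "cat"), ("bob", "cat"), ("cat", "ann"), ("zed", "cat")]
sizes = node_sizes(nodes, follow_edges)
for name in sorted(sizes):
    print(name, round(sizes[name], 2))
```

Here "zed" follows "cat" but is not part of the subgraph, so that follow does not count toward the size of "cat".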

Determining Community Interests

After you have a colored graph with communities and clusters, it’s time to try to figure out what these clusters are organized around. The first thing to do is to manually inspect nodes to see who they are, often starting with the biggest nodes. Typically you’ll find that people organize themselves into groups like these: sports, music, mainstream media, politics, food, technology, arts, books, culture, and the like. These clusterings vary somewhat from city to city, but you’ll see some common patterns between cities.

After communities are detected, we can start to monitor traffic coming out of these communities to look for topics of conversation and other characteristics which may be helpful in explicating the observed clustering. For instance, we can gather a corpus of Twitter traffic for each community, recording:

- hashtags

- commonly shared links

- languages in use

- operating systems in use (desktop vs. mobile, etc.)

- client software in use

- geographic coordinates (as provided by GPS)

- age of user accounts

- user mentions

With this kind of data recorded as a histogram for each community, we can start to get a pretty good sense that a given group is mostly concerned with sports, music, politics, and the like. By working with a collaborator who is well-versed with the culture of a given place, we can also get a sense of local subtleties that might not be immediately obvious to an outside observer. These insights can be used to generate legends for a final graph product, and other editorial content.
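As an example of one such histogram, here is a sketch that counts hashtags per community. The tweet format (a list of user and text pairs) and the community labels are assumptions for illustration; the same pattern applies to links, languages, or client software.

```python
import re
from collections import Counter, defaultdict

HASHTAG = re.compile(r"#(\w+)")

def hashtag_histograms(tweets, community_of):
    """Count hashtags per community.

    tweets:       iterable of (user, text) pairs.
    community_of: dict mapping users to community labels; tweets from users
                  outside the graph are skipped.
    """
    histograms = defaultdict(Counter)
    for user, text in tweets:
        community = community_of.get(user)
        if community is None:
            continue
        histograms[community].update(tag.lower() for tag in HASHTAG.findall(text))
    return histograms

tweets = [
    ("ann", "Go #Ravens! #NFL"),
    ("bob", "#ravens win"),
    ("cat", "New album out #music"),
    ("zed", "#spam"),
]
community_of = {"ann": "blue", "bob": "blue", "cat": "yellow"}

histograms = hashtag_histograms(tweets, community_of)
for community, counts in sorted(histograms.items()):
    print(community, counts.most_common(3))
```

Reading the top few entries for each community is usually enough to suggest a working label, which a local collaborator can then confirm or correct.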

Investigating Race

Another phenomenon is very apparent in American cities: people separate by race. American cities like Baltimore and St. Louis are polarized into black and white communities, with some people bridging in the middle. These cities present roughly as eccentric polygons, with differences in mesh density at each end of a racially polarized spectrum. Relatively prosperous European cities, like Munich or Barcelona, present more like “balls,” with no clear majority/minority tension displayed. Istanbul, by contrast, shows a strong divide between the rich establishment and a large emergent cohort of frustrated young men.

In many American cities, we do observe strong racial homophily in the data. For example, in the data for a city like Baltimore, people at opposite ends of the spectrum are generally strongly identifiable as “very white” or “very black.” This is a touchy subject, and it’s difficult to discuss this topic without offending people; however, what we are aiming to do here is to try to understand what the data is showing us, not make generalizations or prescriptive measures about race.

One way to ground this discussion about race is to look at the profile photos associated with user accounts. We can gather these photos in bulk, and when displaying them together, it quickly becomes clear that people have often organized themselves around skin color. When they have not organized themselves around skin color, they are organized around other cultural signifiers like fashion or style. This can give us a concrete sense that certain communities consist primarily of one race or another. However, this is not to suggest that outliers do not exist, nor to make any statement about any given individual, and it certainly does not suggest an inverse relationship between race and the ability or proclivity to participate in any given cluster or clusters.

The Final Product

Once we have refined the data, identified communities, investigated trends and topics within communities, and potentially looked at photos, profiles, and other cultural signifiers, we are in a position to annotate the network map with editorial legends. This is a fundamentally human process. I generally use a tool like Photoshop or Keynote to annotate an image, but this could be done in a number of ways. Once this step is done, a final product can be exported.

This entire process of taking data from one or more biased sources, refining data, and then using a human editor who also has a bias produces a final end product which is comparable to an “op-ed” in a newspaper: it’s an expression of one possible mental model of the world, informed by a combination of facts, errors, and pre-existing opinions. It’s up to the reader to determine its utility, but to the extent it offers a novel view and the reader deems the data and editorial biases acceptable, such renderings can be an informative lens through which to see a city.

A Note About Bias, Inclusions, and Exclusions

A common criticism of large-scale social network analysis is that it is not representative because it is biased in some way. A typical statement is, “I don’t use Twitter, so the results don’t include me, therefore I question the validity of the approach.” Likewise, people may say the same thing about LinkedIn or Facebook, or start into a long explanation of how their online habits are very different from their offline reality. These are important facts to consider, but the question is really whether network analysis is representative enough to start to deliver information that we didn’t have until now.

To answer this, it’s helpful to think about this in terms of recent history. In 2004 or 2007, it probably wasn’t helpful to say anything in particular about data from social networks, because none of them had enough penetration to deliver insights beyond a very biased community of early adopters: whether it was geeks in the case of Twitter, or young music fans in the case of MySpace, or college students in the case of Facebook.

As these networks have continued to evolve, however, their penetration is increasing rapidly. They are also accreting a great deal of historical data about people and their position in social networks which give us clues as to their offline interactions. This accretion of data will only continue and begin to paint a more complete and multi-dimensional picture of our culture — especially if you correlate the data from multiple social networks.

I believe we are now at a point where these data sets are large and detailed enough to offer important insights about our “real world” culture. This belief is based on two facts. First, it’s possible to get a good working image of a community by gathering data over just a few hours. While we make every effort to gather as much data as is realistically possible before making statements about a community, the effect of adding more data is additive: we add members to the community, but we do not fundamentally alter its shape.

To understand this effect, it is helpful to consider an analogy from astronomy. A more powerful telescope can yield a better, sharper image of a star formation, but it changes neither the shape of the star formation nor our basic understanding of its structure. Better tools simply yield more detailed data. I believe we are at a point where we have enough data to begin to understand structures, and that more data will yield more detailed understanding, but will not alter the fundamental shapes we are beginning to uncover. This line of thinking is helpful in comprehending “exclusions.”

On the subject of “false inclusions,” these are generally sussed out in the layout and review process, but all of the graphs I have produced have generated a limited number of false inclusions as a practically inevitable artifact of the process. As a result, any statements one may make about a specific individual based on this kind of analysis may or may not be valid: they should be viewed through the lens of the biases disclosed alongside the visualization. However, repeated experience has shown that removing a small number of false inclusions does not have a material effect on the community structure.

On the subject of “network bias” (“I don’t use x, therefore the conclusions are not valid”), when comparing data from Facebook, Twitter, LinkedIn, AngelList and other sources, the same patterns of homophily are exhibited. While each network has its own bias (LinkedIn towards professionals, Twitter towards both youths and professionals, Facebook towards grandparents), if we limit analysis to a given geography, we will inevitably see homophilic tendencies which are correlated in each network.

While it’s difficult to speak about this in detail yet, as obtaining full comparable data sets from each source is currently quite challenging, early investigations indicate that the same patterns are exhibited across all networks. This squares with the notion that what we are really examining in these networks is but a proxy for real-world relationships. Ultimately, these networks must converge into something that approximates this abstract, Euclidean reality, and as we get more data and correlate it, it’s likely that a unified data set will closely reflect the actual geometry of our communities.

Future Research Directions

This kind of analysis is forming the basis for the emergent field of “computational sociology,” which is currently being explored by a variety of researchers around the world. This work has a number of important implications, and poses questions like:

- What do we mean by diversity? If we should be looking to bridge networks, is race a helpful proxy for that function? Or should we be looking to develop new, better measures of diversity?

- What is the nature of segregation? Is physical segregation a cause of the structure of our social networks? Or is it a manifestation of them?

- What is the role of urban planning? Is our social fabric something that’s shaped by urban planning, or are our cities simply a manifestation of our social fabric?

- What kind of interventions might we undertake to improve a city’s health? Should they be based first on creating and improving relationships?

- What is creative capital and how can we maximize it? If creative capital is a byproduct of relationships between people with diverse backgrounds, then we should be able to increase creative capital by orders of magnitude by bridging networks together and increasing the number of relationships overall.

The level of detail we are able to extract from network data in cities is greater than that of any data source we have ever had, and it can supplement many other indicators currently used to measure community health and make decisions about resource allocation. For example, the census may tell us someone’s race, family name and physical address, but it tells us very little about their participation in the social fabric. And if we believe that the city is primarily manifested as the sum total of social relationships, then clearly data surrounding social interactions is more useful than other attributes we may harvest.

Discerning Stable Structures vs. Topical Discussions

Many researchers have been using social network data like Tweets to characterize conversations around a topic, and it is true that by harvesting massive quantities of Tweets, one can discern trends, conversation leaders, and other insights. However, this type of analysis tends to be topical and to shift a great deal depending on whether people are active online, and it contains biases about why they might be active online. Regardless, this kind of research is well worth understanding, and may ultimately lead to a better understanding of network structure formation. However, I want to differentiate it from the kind of inquiry I am pursuing.

So, rather than “you are what you tweet,” I believe the truth is something more like “you tweet what you are.” Network follow structures tend to be very stable; while they do change and evolve over time, one interesting feature of the process I am using is that it tends to be very stable over time and the results of analysis are repeatable. That is to say, if I analyze Baltimore one day, and then repeat the analysis a month later, I will obtain a comparable result. This is not likely to be the case with semantic analysis as topics and chatter may change over the course of a few weeks.

Tracking (and Animating) Changes Over Time

Because network structure analysis is fundamentally labor intensive and somewhat difficult to compute, it’s difficult to automate and to perform on an ongoing basis. For example, it would be nice in Baltimore to know whether things are getting “better” or “worse.” Right now, only by taking periodic snapshots and comparing them can we get a sense for this. It would be helpful to be able to animate changes over time, and while this is theoretically possible, tools to do this have to be built by hand right now. My hope is to apply such tools to the process and dramatically increase our ability to monitor network structure in real time, and spot trends.

Ultimately, this will give us some ability to see our social structure change in near real-time, developing a sense as to whether interventions are having a positive effect, or any effect at all.
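One very simple way to compare two snapshots is to measure how much their sets of relationships overlap. The sketch below uses Jaccard similarity for this; it is a hypothetical starting point of my choosing, not a measure of whether a city is getting “better” or “worse.”

```python
def edge_jaccard(edges_then, edges_now):
    """Jaccard similarity of two snapshots' edge sets: 1.0 means identical,
    0.0 means no relationships in common."""
    then, now = set(edges_then), set(edges_now)
    if not then and not now:
        return 1.0
    return len(then & now) / len(then | now)

snapshot_then = [("ann", "bob"), ("bob", "cat"), ("cat", "ann")]
snapshot_now = [("ann", "bob"), ("bob", "cat"), ("cat", "dan")]
similarity = edge_jaccard(snapshot_then, snapshot_now)
print(f"edge overlap between snapshots: {similarity:.2f}")
```

A number like this only says how much the graph changed, not how. Tracking bridging relationships between specific communities over time would say more about whether divisions are narrowing.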

Healthy vs. Unhealthy Network Patterns – and Brain Development

Dr. Sandy Pentland, an MIT researcher who is perhaps the leading figure in the field of computational sociology, has suggested that there are certain patterns that characterize “healthy” networks, as well as patterns that characterize “unhealthy” networks.

Healthy networks are characterized by:

- Frequent, short interactions

- Broad participation by all nodes and meshing

- Acknowledgement of contributions

- A tendency to explore other parts of the network

By contrast, unhealthy networks exhibit the opposite patterns: broadcast vs. peer-to-peer interactions; fewer network connections; isolated subnetworks; a lack of exploration of other parts of the network.

Perhaps the most telling finding is that these patterns are not limited to just human networks, but also appear in other colony-based organisms, like bees. It appears that there are universal patterns of network health that apply to life in general.

The other important finding is that lack of network exploration affects brain development in young people. Specifically, young people who grow up in an environment where network exploration is not valued tend to exhibit structural changes in the brain which appear to dampen their desire to explore networks as adults. This produces a multi-generational effect, where children who grow up in isolated networks tend to persist in isolated networks, and to pass that on to their children.

This suggests that one possible intervention is to promote network exploration at an early age across the entire population. How we might do this is certainly open to discussion, but it seems to be a very powerful tool in breaking down divisions in our social networks.

What’s next?

As this project advances, we are gathering ever-larger datasets. This requires more and more computational power and distributed algorithms for visualization. If this is something you’re also working on, I would like to talk to you, so please contact me. There are some interesting challenges in scaling this up, but there are also interesting opportunities emerging to apply these approaches!

Mapping Your City

We have a long list of places that we’re looking at mapping, and trying to prioritize opportunities. If you have a mapping project you would like us to consider, please contact us. We will try to get back to you as quickly as possible, but in general, we’re looking for projects that can make a serious social impact. It takes a serious amount of effort to generate these maps, so be thinking about possible partners who could potentially fund this work and help advance this science.